I recently upgraded my computer's RAM from 16GB to 32GB. It was running fine, but ever since I started doing more work with Docker containers, it felt like it would get sluggish once in a while.

With double the RAM, I figured I'd be golden. It turns out it isn't that simple. This week, the company I work for decided to start using containers for most of our project's dependencies: you know, SQL Server, Solr, Redis, etc. There are quite a few of them, but I've never had a problem running them simultaneously on my machine.

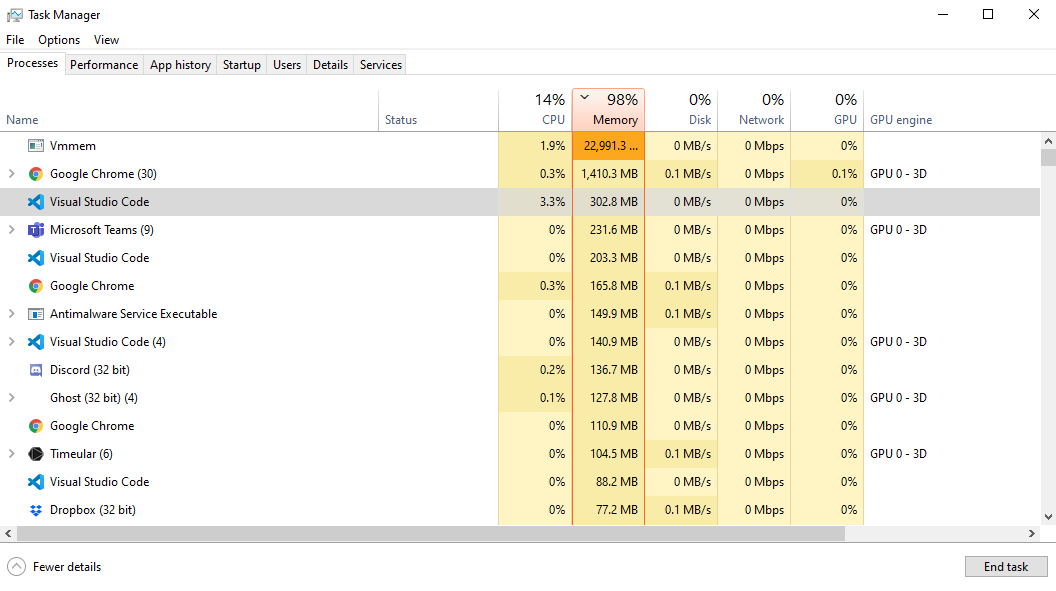

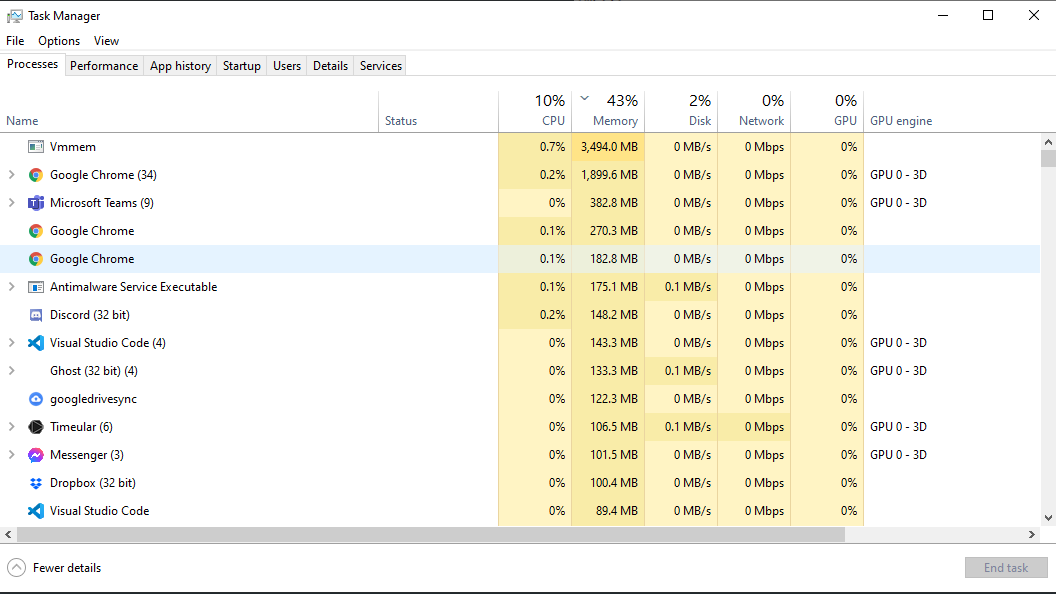

A docker-compose up later and my computer became sluggish again. What?! A quick look at the Processes tab in Task Manager showed that 98% of my system's memory was being used and 23GB out of my 32GB was being used by something called Vmmem. What the heck is Vmmem and why is it using so much memory?

A quick Google search produced some answers. The short of it is that Vmmem is the process that runs the Windows Subsystem for Linux (WSL), which is used by Docker on Windows. So Vmmem = WSL = Docker. This is a major oversimplification, but if you never use WSL directly and are only using it for Docker, it's pretty close to reality for you.

Anyway, even though I now understood what this Vmmem thing was, it was pretty clear in my mind that there was no way the roughly 30 processes I was running inside Docker containers needed to consume this much memory. Most of them were relatively lightweight.

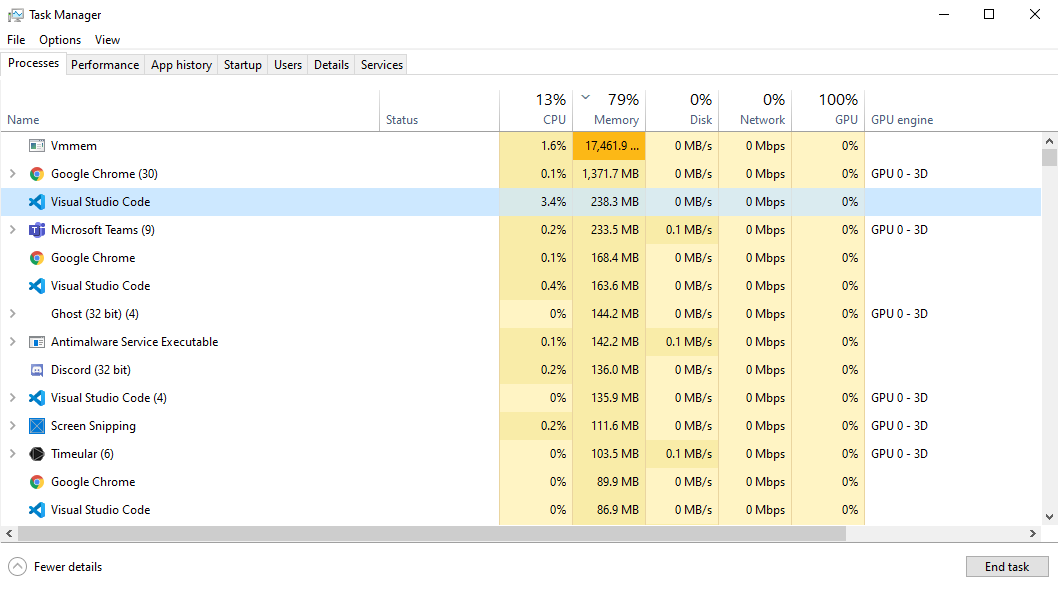

The first thing I did was bring down all my containers to see if that would help. After docker-compose down, the situation improved, but not nearly as much as it should have.

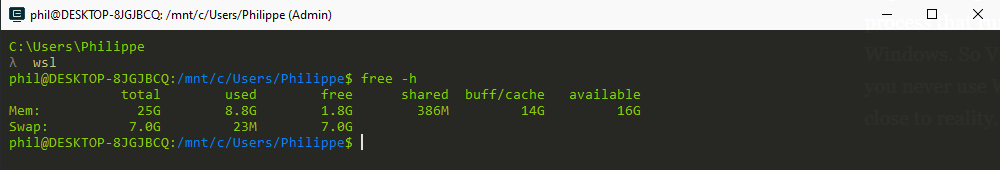

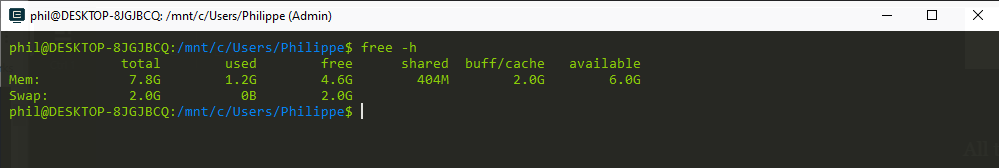

Why in the world is Vmmem still using 17 GB of RAM if I'm no longer running any Docker containers? Well, let's find out, shall we? In the terminal, I switched over to WSL and ran the Linux free command which gives you an overview of memory usage.

As far as WSL was concerned, it had rights over 25 GB of my system's memory. At that moment it was using 8.8 GB (for what?!), but what is interesting is that it had reserved a full 14 GB as buff/cache.

You see, nature, and Linux apparently, abhors a vacuum. Since it considered that it had rights over 25 GB of my RAM but didn't need all of it, it figured it would just earmark whatever it didn't need as cache. You know, because why not. It's not like the host machine has any need for memory on the Windows side of things (looking at you, Google Chrome).

So why does WSL feel entitled to this much RAM? Well, it turns out that, depending on which build of Windows or WSL you are on, WSL gets access to 50%-80% of your system's RAM by default. Again, what?! 25 GB of RAM is way more than is needed to run a few fistfuls of Docker containers.

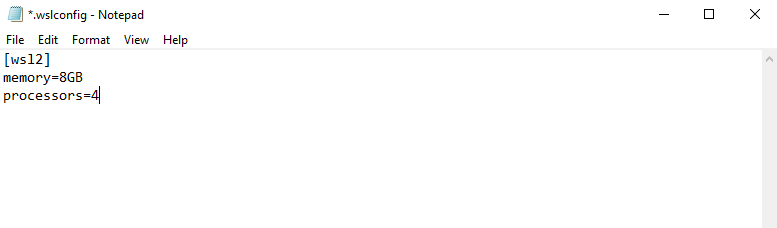

Thankfully, there is a way to fix this. To impose our will on WSL and get it to forget about that silly default setting, all we need to do is place a .wslconfig file in the root of our user folder: C:\Users\<yourUserName>\.wslconfig.

In my case I ended up limiting WSL to 8 GB of my RAM and, while I was at it, 4 of my 8 CPU cores - it has access to all of them by default.
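
A file along these lines would apply those limits. Treat it as a minimal sketch: it assumes the [wsl2] section with its memory and processors keys, and the values are simply the ones mentioned above, so adjust them for your own machine.

```ini
# C:\Users\<yourUserName>\.wslconfig
[wsl2]
# Cap the amount of RAM the WSL 2 virtual machine can claim from Windows
memory=8GB
# Limit the virtual machine to 4 logical processors
processors=4
```

The file is only read when WSL starts, so the new limits do not apply until WSL has been restarted.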

All that was left to do was stop and restart WSL. A simple wsl --shutdown in the terminal will stop WSL. At that point Docker will notice that it has shut down and offer to restart it. Let it do that for you.

And there you have it. We have tamed the hungry beast.

There are other settings you can configure for the Windows Subsystem for Linux in that .wslconfig file; you can find them here.
